Email maintenance can be a real pain: every time my wife logs in, her email provider warns that the mailbox is full and needs cleaning up. I’m definitely not going to sort through more than 5,000 emails spanning 15 years to decide what’s important and what’s spam. My approach is to archive everything locally and delete emails older than two years from the provider. I'm glad there are tools like Bichon for this.

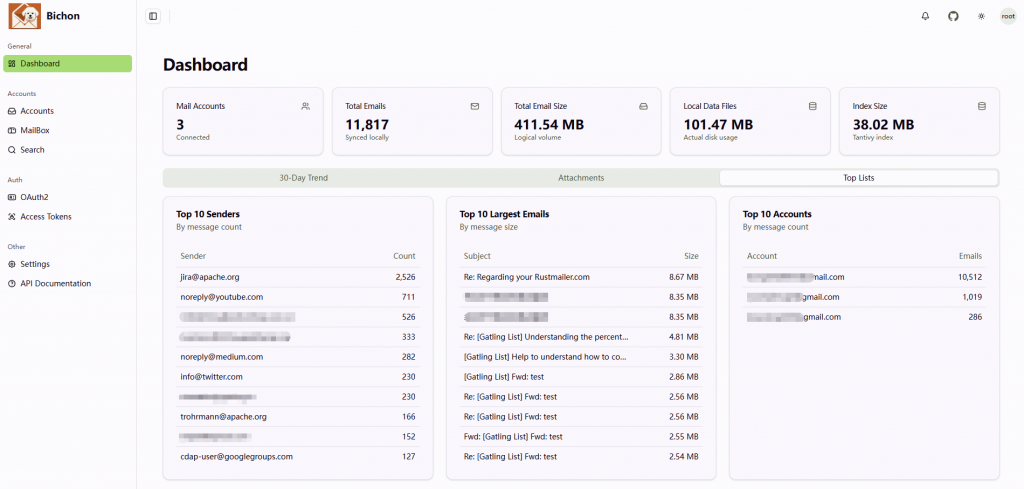

Bichon is an open-source email archiving system that synchronizes emails from IMAP servers and indexes them for full-text search. It is designed for archiving and searching, not for sending or receiving email.

Bichon data directory explained (TrueNAS)

Bichon stores all runtime data under a single data/ directory. This includes databases, archived mail, and search indexes.

Directory layout

```text
data/
├─ mailbox.db
├─ meta.db
├─ eml/
├─ envelope/
├─ logs/
└─ tmp/
```

What each part is for

mailbox.db

Main SQLite database. Stores mailbox configuration and account data.

Required for restore.

meta.db

Metadata database. Tracks messages and internal state.

Required for restore.

eml/

Email storage and full-text search index (Tantivy).

Contains raw message data and index files.

Required for restore, though indexes can be rebuilt.

envelope/

Search index for email headers (from, subject, dates).

Not required; can be regenerated.

logs/

Application logs.

Not required.

tmp/

Temporary working files.

Not required.

Here is the Bichon eml/ folder, which contains the raw email storage and the full-text search index:

```text
truenas[/mnt/.ix-apps/app_mounts/bichon/data/eml]$ ll
total 603830
drwxr-xr-x 2 apps 25 Jan 26 15:51 ./
drwxr-xr-x 6 apps 8 Jan 16 15:02 ../
-rw------- 1 apps 791 Jan 26 15:51 .managed.json
-rw-r--r-- 1 apps 0 Jan 16 15:02 .tantivy-meta.lock
-rw-r--r-- 1 apps 0 Jan 16 15:02 .tantivy-writer.lock
-rw-r--r-- 1 apps 253 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.fast
-rw-r--r-- 1 apps 123 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.fieldnorm
-rw-r--r-- 1 apps 147 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.idx
-rw-r--r-- 1 apps 117 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.pos
-rw-r--r-- 1 apps 4016 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.store
-rw-r--r-- 1 apps 307 Jan 26 15:46 39e9e74ead7849aa9741c6e09bafd4e7.term
-rw-r--r-- 1 apps 1074 Jan 26 15:36 910570110fe84ac596e8438b7679c99c.30934.del
-rw------- 1 apps 1327 Jan 26 15:51 meta.json
```

.managed.json

- Tantivy's list of the index files it manages in this folder (used to clean up obsolete segment files)

- Required for proper index consistency

- Small JSON file, automatically updated

.tantivy-meta.lock & .tantivy-writer.lock

- Lock files used by Tantivy (Rust full-text search engine) to prevent concurrent writes

- Not needed for backup; they are recreated automatically

Files with long hex names (e.g., 910570110fe84ac596e8438b7679c99c.*)

- These are Tantivy index segments; each segment holds the data for a batch of indexed emails

- File types inside each segment:

  - .store → the actual email content, stored for search
  - .idx, .term, .pos, .fast, .fieldnorm → various search index structures
  - .del → marks deleted documents

- Required for restore if you want to keep full-text search working without rebuilding

- Can be rebuilt from mailbox.db/meta.db if lost, but rebuilding takes time

meta.json

- Index-wide metadata: the schema and the list of segments currently in use (not per batch)

- Required for indexing consistency
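To see which files in eml/ belong together, a small hypothetical helper can group them by the hex ID before the first dot. The 32-hex-character naming is taken from the listing above rather than from Tantivy documentation, so treat it as an assumption; dot-files (.managed.json, the lock files) and meta.json are left out because they are not per-segment.

```python
from collections import defaultdict
from pathlib import Path

HEX_DIGITS = set("0123456789abcdef")


def group_segment_files(index_dir: Path) -> dict[str, list[str]]:
    """Map each segment ID to the file suffixes that share it (hypothetical helper).

    Assumes segment files are named <32 hex chars>.<suffix>, as in the listing above,
    e.g. "39e9...e4e7.store" or "9105...c99c.30934.del".
    """
    segments: dict[str, list[str]] = defaultdict(list)
    for path in sorted(index_dir.iterdir()):
        seg_id, _, suffix = path.name.partition(".")
        # ".managed.json" yields an empty ID and "meta.json" yields "meta": both are skipped
        if len(seg_id) == 32 and set(seg_id) <= HEX_DIGITS:
            segments[seg_id].append(suffix)
    return dict(segments)
```

For example, `group_segment_files(Path("/mnt/.ix-apps/app_mounts/bichon/data/eml"))` would return one entry per segment with its suffixes, which makes it easy to spot which index files travel together when copying the folder.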

Backup recommendation

Back up the entire data/ directory, excluding logs/ and tmp/.

At minimum, ensure mailbox.db, meta.db, and eml/ are preserved.
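The recommendation above can be scripted with Python's tarfile module. This is a minimal sketch: `backup_data_dir` is a hypothetical helper, DATA_DIR matches the app mount shown in this post while BACKUP_FILE is a made-up target, and it assumes the Bichon app is stopped while it runs so the SQLite databases and the index are not changing mid-copy.

```python
import tarfile
from pathlib import Path
from typing import Optional

# Hypothetical locations: DATA_DIR is the TrueNAS app mount seen in this post,
# BACKUP_FILE is a made-up target; adjust both.
DATA_DIR = Path("/mnt/.ix-apps/app_mounts/bichon/data")
BACKUP_FILE = Path("/mnt/ssd-pool/backups/bichon-data.tar.gz")

# Top-level folders inside data/ that are not needed for a restore
EXCLUDED_DIRS = {"logs", "tmp"}


def skip_excluded(member: tarfile.TarInfo) -> Optional[tarfile.TarInfo]:
    """tarfile filter: drop data/logs/ and data/tmp/ (and everything below them)."""
    parts = Path(member.name).parts  # e.g. ("data", "logs", "app.log")
    if len(parts) > 1 and parts[1] in EXCLUDED_DIRS:
        return None
    return member


def backup_data_dir(data_dir: Path, backup_file: Path) -> None:
    """Write data_dir into a gzip-compressed tarball, stored under the name data/."""
    with tarfile.open(backup_file, "w:gz") as tar:
        tar.add(data_dir, arcname="data", filter=skip_excluded)
```

Call it as `backup_data_dir(DATA_DIR, BACKUP_FILE)`. Returning None from the filter for a directory also stops tarfile from descending into it, so nothing under logs/ or tmp/ is read at all.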

How to Export

You can reuse Bichon’s archived emails in another app, but there are a few important things to know, because Bichon does not implement email export (yet)! The .store files inside data/eml/ are Tantivy index files, not raw .eml files, so other apps cannot read them directly. You have to extract the original emails first.

1.) Set up a Python virtual environment (see: Creating isolated userland Python virtualenvs in TrueNAS – Infrastructure Blog)

```shell
truenas[/mnt/ssd-pool/bichon]$ python3 -m venv venv_bichon --without-pip
truenas_admin@truenas[/mnt/ssd-pool/bichon]$ source venv_bichon/bin/activate
(venv_bichon) truenas_admin@truenas[/mnt/ssd-pool/bichon]$ curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 2131k 100 2131k 0 0 3759k 0 --:--:-- --:--:-- --:--:-- 3758k

## NOTE: the active venv is shown as (venv_bichon) in the prompt; type 'deactivate' to exit it ##

(venv_bichon) truenas_admin@truenas[/mnt/ssd-pool/bichon]$ python3 get-pip.py
Collecting pip
  Downloading pip-26.0-py3-none-any.whl.metadata (4.7 kB)
Collecting setuptools
  Using cached setuptools-80.10.2-py3-none-any.whl.metadata (6.6 kB)
Collecting wheel
  Using cached wheel-0.46.3-py3-none-any.whl.metadata (2.4 kB)
Collecting packaging>=24.0 (from wheel)
  Using cached packaging-26.0-py3-none-any.whl.metadata (3.3 kB)
Downloading pip-26.0-py3-none-any.whl (1.8 MB)
   ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/1.8 MB 12.1 MB/s 0:00:00
Using cached setuptools-80.10.2-py3-none-any.whl (1.1 MB)
Using cached wheel-0.46.3-py3-none-any.whl (30 kB)
Using cached packaging-26.0-py3-none-any.whl (74 kB)
Installing collected packages: setuptools, pip, packaging, wheel
Successfully installed packaging-26.0 pip-26.0 setuptools-80.10.2 wheel-0.46.3

(venv_bichon) truenas_admin@truenas[/mnt/ssd-pool/bichon/venv_bichon]$ pip install zstandard
Collecting zstandard
  Downloading zstandard-0.25.0-cp311-cp311-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (3.3 kB)
Downloading zstandard-0.25.0-cp311-cp311-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (5.6 MB)
   ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 5.6/5.6 MB 12.5 MB/s 0:00:00
Installing collected packages: zstandard
Successfully installed zstandard-0.25.0
```

2.) What is required for the script?

The Python library for Tantivy was built without Zstd support enabled, so it cannot read the Zstd-compressed .store files. Since we can’t easily recompile the Python tantivy library on TrueNAS to enable that feature, we are going to use a “brute force” recovery method instead.

Because a .store file is basically just a long sequence of Zstd-compressed blocks, we don’t actually need the database index to read it. We can “carve” the emails out by scanning each .store file for the Zstd magic header, decompressing from every hit, and checking whether the result looks like an email.

Why this works:

Bypasses the Index: We aren't asking Tantivy for permission anymore; we're just reading the raw bytes off the disk.

Standard Compression: Even if the Python tantivy library wasn't compiled with Zstd, the zstandard library we installed via pip certainly supports it.

Magic Numbers: Every compressed block in a .store file starts with the same 4 bytes (28 B5 2F FD, the Zstd frame magic number). We simply slice the file at every occurrence of those bytes and try to decompress each piece.
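The carving idea from the list above can also be sketched with the standard library alone. This hypothetical `candidate_frame_offsets` helper records where each magic number occurs instead of calling `data.split()`, and discards hits whose following Frame_Header_Descriptor byte has the reserved bit (bit 3) set, which the Zstandard format (RFC 8878) requires to be zero. It is only a pre-filter: random bytes can still pass it, so the decompressor remains the real test.

```python
ZSTD_MAGIC = b"\x28\xb5\x2f\xfd"  # 0xFD2FB528 stored little-endian


def candidate_frame_offsets(data: bytes) -> list[int]:
    """Return offsets where a Zstd frame could plausibly start.

    Every occurrence of the magic number is a candidate; it is kept only if a
    Frame_Header_Descriptor byte follows it and that byte's reserved bit
    (0x08) is clear, as required by RFC 8878.
    """
    offsets = []
    pos = data.find(ZSTD_MAGIC)
    while pos != -1:
        fhd_index = pos + len(ZSTD_MAGIC)
        if fhd_index < len(data) and not data[fhd_index] & 0x08:
            offsets.append(pos)
        pos = data.find(ZSTD_MAGIC, pos + 1)
    return offsets
```

One possible use, not tested against Bichon data: pass `memoryview(data)[offset:]` to `dctx.decompress(...)` for each offset. A frame is then no longer cut short at a stray magic sequence that happens to occur inside it; this relies on python-zstandard ignoring bytes after the first frame, which recent versions do by default (check the `allow_extra_data` argument in the docs for your version).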

The export script (bichon_mail_export.py):

```python
#!/usr/bin/env python3
"""
# SPDX-License-Identifier: MIT
#
# Copyright (c) 2026 MRi-LE
#
# This software is provided "as is", without warranty of any kind.
# Authored by Michael Richter, with assistance from AI tools.
"""
import io
import re
import tarfile
from pathlib import Path

import zstandard as zstd

# === CONFIG ===
STORE_DIR = "/mnt/ssd-pool/bichon/eml"  # folder containing the *.store files
OUTPUT_FILE = "/mnt/ssd-pool/bichon/exported_emails.tar.gz"
ZSTD_MAGIC = b'\x28\xb5\x2f\xfd'  # the 4 bytes every Zstd frame starts with
MIN_SIZE = 2000  # ignore fragments of this size or smaller (bytes)


def main():
    store_files = sorted(Path(STORE_DIR).glob("*.store"))
    dctx = zstd.ZstdDecompressor(max_window_size=2**31)
    total_count = 0

    print(f"Creating compressed archive: {OUTPUT_FILE}")
    # Open tarball for writing with gzip compression ('w:gz')
    with tarfile.open(OUTPUT_FILE, "w:gz") as tar:
        for store_file in store_files:
            print(f"Streaming from: {store_file.name}")
            with open(store_file, 'rb') as f:
                data = f.read()

            # Slice at every magic number; chunks[0] is whatever precedes the first frame
            chunks = data.split(ZSTD_MAGIC)
            for chunk in chunks[1:]:
                try:
                    decompressed = dctx.decompress(ZSTD_MAGIC + chunk, max_output_size=100*1024*1024)
                except zstd.ZstdError:
                    # Not a valid frame (e.g. the magic bytes occurred by chance): skip it
                    continue

                # A block can hold several emails: split before typical first header lines
                parts = re.split(b'\n(?=Return-Path:|Received:|From: )', decompressed)
                for part in parts:
                    clean_part = part.strip()
                    # Apply our proven "Strict Filters"
                    if len(clean_part) <= MIN_SIZE:
                        continue
                    if not clean_part.startswith((b"Return-Path:", b"Received:", b"From:")):
                        continue
                    # Create header info for the file inside the tar
                    tar_info = tarfile.TarInfo(name=f"email_{total_count:05d}.eml")
                    tar_info.size = len(clean_part)
                    # Add the in-memory bytes to the tarball
                    tar.addfile(tar_info, fileobj=io.BytesIO(clean_part))
                    total_count += 1
                    if total_count % 500 == 0:
                        print(f" Archived {total_count} emails...")

    print(f"\nSuccess! Total of {total_count} emails streamed into {OUTPUT_FILE}")


if __name__ == "__main__":
    main()
```

NOTE: Adjust STORE_DIR and OUTPUT_FILE to match your setup.
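One caveat with the splitting step in the export script: a message normally carries several `Received:` lines, so splitting before every line that starts with `Received:` also cuts inside a single message's header, and a quoted `From: ` line in a forwarded mail can start a bogus new message. Combined with the MIN_SIZE filter, this can leave exported files without their `Return-Path:` and earlier `Received:` lines. The hypothetical `split_messages` below only starts a new message where a start header sits at the very beginning of the block or directly after non-text bytes. That rests on an assumption I have not verified against Tantivy's or Bichon's document layout: that consecutive documents in a decompressed block are separated by binary bytes (length prefixes, field IDs) rather than by a line break.

```python
import re

# Header names the export script treats as the start of a message
START_HEADER = rb"(?:Return-Path:|Received:|From: )"
# Boundary = start header NOT preceded by printable ASCII or whitespace,
# i.e. at the start of the block or right after binary bytes (assumption!)
BOUNDARY = re.compile(rb"(?<![\t\n\r\x20-\x7e])" + START_HEADER)
TEXT_BYTES = frozenset(b"\t\n\r" + bytes(range(0x20, 0x7F)))


def trim_trailing_binary(chunk: bytes) -> bytes:
    """Drop non-text bytes at the end (e.g. the next document's length prefix)."""
    end = len(chunk)
    while end > 0 and chunk[end - 1] not in TEXT_BYTES:
        end -= 1
    return chunk[:end]


def split_messages(block: bytes, min_size: int = 2000) -> list[bytes]:
    """Split a decompressed block into candidate emails larger than min_size."""
    starts = [m.start() for m in BOUNDARY.finditer(block)]
    messages = []
    for begin, end in zip(starts, starts[1:] + [len(block)]):
        message = trim_trailing_binary(block[begin:end]).strip()
        if len(message) > min_size:
            messages.append(message)
    return messages
```

To try it, the inner loop of the export script would become `for clean_part in split_messages(decompressed, MIN_SIZE):` followed by the existing tar code; comparing a few exported headers with those from the original run shows whether the assumption holds for your data.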

3.) Execute and monitor the output

```shell
(venv_bichon) truenas_admin@truenas[/mnt/ssd-pool/bichon]$ python3 bichon_mail_export.py
Creating compressed archive: /mnt/ssd-pool/bichon/recovered_emails.tar.gz
Streaming from: 2b5ebeda9eb142719759bf49a1ce6e50.store
 Archived 500 emails...
 Archived 1000 emails...
 Archived 1500 emails...
 Archived 2000 emails...
 Archived 2500 emails...
 Archived 3000 emails...
 Archived 3500 emails...
 Archived 4000 emails...
 Archived 4500 emails...
 Archived 5000 emails...
 Archived 5500 emails...
Streaming from: 3259b5417e894544919ebc9cc5c9ad1e.store
Streaming from: 35688f9a8f1f467691850201b395eaa4.store
Streaming from: c11b9c7701d24df5b1d5aa1357c61edb.store

Success! Total of 5651 emails streamed into /mnt/ssd-pool/bichon/recovered_emails.tar.gz
```

4.) You can verify the sanity of the exported .eml files by looking at their headers.

For this I created an inspection script (bichon_inspect_export.py) with header and body search capabilities:

```python
#!/usr/bin/env python3
"""
# SPDX-License-Identifier: MIT
# Copyright (c) 2026 MRi-LE
# This software is provided "as is", without warranty of any kind.
# Authored by Michael Richter, with assistance from AI tools.

Bichon Inspect Export Tool: Inspect or Search .eml files inside a .tar.gz archive.

positional arguments:
  archive               Path to the .tar.gz or .tar file to process

options:
  -h, --help            show this help message and exit
  -l LIMIT, --limit LIMIT
                        Limit displayed files or search results (default: 10)

Search Options:
  -s KEYWORD, --search KEYWORD
                        Search for a word/phrase (Case Insensitive)
  -b, --body            Extend search to the email body (default: headers only)
"""
import argparse
import os
import re
import tarfile
from email.errors import HeaderParseError
from email.header import decode_header


def decode_mime(text):
    """Decode RFC 2047 encoded-words (e.g. =?utf-8?B?...?=) into plain text."""
    try:
        result = ""
        for decoded_string, charset in decode_header(text):
            if isinstance(decoded_string, bytes):
                result += decoded_string.decode(charset or 'utf-8', errors='ignore')
            else:
                result += decoded_string
        return result
    except (HeaderParseError, LookupError):
        # Malformed header or unknown charset: show the raw value instead
        return text


def process_archive(args):
    if not os.path.exists(args.archive):
        print(f"Error: File '{args.archive}' not found.")
        return

    # Prepare search keyword (Case Insensitive)
    search_keyword = args.search.lower() if args.search else None

    print(f"Opening: {os.path.basename(args.archive)}")
    if search_keyword:
        mode = "BODY" if args.body else "HEADERS"
        print(f"Action: Searching for '{args.search}' (Case Insensitive) in {mode}")
        print(f"Limit: Stopping after {args.limit} matches.")
    else:
        print(f"Action: Inspecting top {args.limit} files")

    print(f"{'Filename':<18} | {'Size (KB)':<10} | {'Subject (Decoded)'}")
    print("-" * 80)

    try:
        with tarfile.open(args.archive, "r:*") as tar:
            found_count = 0
            for member in tar:
                if not (member.isfile() and member.name.endswith(".eml")):
                    continue
                f = tar.extractfile(member)
                if f is None:
                    continue

                # Body search reads up to 64 KB per email; headers fit in the first 8 KB
                read_size = 1024 * 64 if (search_keyword and args.body) else 8192
                content_text = f.read(read_size).decode('utf-8', errors='ignore')

                if search_keyword:
                    # Headers end at the first blank line; search past it only with --body
                    haystack = content_text if args.body else re.split(r"\r?\n\r?\n", content_text, maxsplit=1)[0]
                    if search_keyword not in haystack.lower():
                        continue

                # Extract Subject only for files we actually display
                subject = "No Subject Found"
                s_match = re.search(r'(?i)^Subject:\s*(.*)', content_text, re.MULTILINE)
                if s_match:
                    subject = decode_mime(s_match.group(1).strip())

                size_kb = member.size / 1024
                print(f"{member.name:<18} | {size_kb:<10.2f} | {subject}")
                found_count += 1

                # Apply the limit to BOTH inspection and search
                if found_count >= args.limit:
                    break

        print("-" * 80)
        status = f"Found {found_count} matches." if search_keyword else f"Showed {found_count} files."
        print(f"Done. {status}")
    except Exception as e:
        print(f"Error: {e}")


if __name__ == "__main__":
    parser = argparse.ArgumentParser(
        description="EML Archive Tool: Inspect or Search .eml files inside a .tar.gz archive.",
        formatter_class=argparse.RawTextHelpFormatter
    )
    parser.add_argument("archive", help="Path to the .tar.gz or .tar file to process")

    # Global Options
    parser.add_argument("-l", "--limit", type=int, default=10,
                        help="Limit displayed files or search results (default: 10)")

    # Search Options
    src_group = parser.add_argument_group('Search Options')
    src_group.add_argument("-s", "--search", metavar="KEYWORD",
                           help="Search for a word/phrase (Case Insensitive)")
    src_group.add_argument("-b", "--body", action="store_true",
                           help="Extend search to the email body (default: headers only)")

    args = parser.parse_args()
    process_archive(args)
```

NOTE: This script only uses the Python standard library, so the virtual environment is optional here; any python3 binary will do.

```shell
(venv_bichon) truenas_admin@truenas[/mnt/ssd-pool/bichon]$ python3 bichon_inspect_export.py recovered_emails.tar.gz
Inspecting top 10 emails inside: recovered_emails.tar.gz
Filename | Size (KB) | Subject (Decoded)
---------------------------------------------------------------------------
email_00000.eml | 44.32 | Jetzt live verfolgen - Ihr DHL Paket kommt heute...
email_00001.eml | 10.44 | Issue kann sich nicht per WiFi verbinden was
email_00002.eml | 35.40 | möchten Sie Ihre Tran
email_00003.eml | 9.32 | Bearbeitete Bewertung von Nobilia
email_00004.eml | 12.91 | wichtige Nachricht in Ihrer Online-Filiale
email_00005.eml | 48.42 | RE: Vorbereitung
email_00006.eml | 61.76 | Geliefert: Ihre Amazon.de-Bestellung
email_00007.eml | 20.38 | Neue Benachrichtigung: Du wurdest im Thema
email_00008.eml | 38.48 | Ihr Amazon Paket liegt am gewünschten Ablageort
email_00009.eml | 37.67 | Eine neue Onlinerechnung liegt vor
```
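Some subjects in this output are cut off mid-word (for example "möchten Sie Ihre Tran"). A likely cause, not verified against these particular messages, is header folding: the `^Subject:\s*(.*)` regex in the inspect script only captures the first physical line, while long or encoded subjects continue on indented lines. A sketch using the standard library's email parser, which unfolds and decodes headers, could replace the regex; `read_subject` is a hypothetical name.

```python
from email import policy
from email.parser import BytesParser


def read_subject(raw: bytes) -> str:
    """Return the decoded Subject of a raw email, joining folded continuation lines.

    Only the header block is parsed (headersonly=True), so a truncated read such as
    the inspect script's first 8 KB works as long as it contains the whole header.
    """
    # policy.default decodes RFC 2047 encoded-words and unfolds multi-line headers
    headers = BytesParser(policy=policy.default).parsebytes(raw, headersonly=True)
    subject = headers["subject"]
    return str(subject) if subject is not None else "No Subject Found"
```

In `process_archive`, the regex and `decode_mime()` call could then be swapped for `subject = read_subject(raw_bytes)`, where `raw_bytes` is the result of `f.read(read_size)` kept before decoding it to text.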